I added a new demo project called ContactForm.Sample at github.com/OviCrisan/ContactForm.Sample. It's just a sample solution with 2 projects: one shows a contact form, in both C# and JS / AJAX versions, which uses HTTP POST to call a web API project, which in turn saves the data to a Postgres database. The web API (Contactform.Sample.Postgres) is built as a separate infrastructure project because I intend to add more API projects for other databases, SQL and NoSQL.

Here's a simple diagram of the projects:

And the regular C# web form with Google reCAPTCHA (demo) enabled:

More technical details are in the readme files in the project source code, including how to run it with Docker Compose from Docker containers.

By the way, the project's Docker images are also available on Docker Hub here and here.

Comments are welcome. Thanks.

Sunday, November 24, 2019

Monday, November 18, 2019

Seagate external hard drive

Black Friday came earlier this year for me, with a new Seagate 6TB external hard drive for all the videos and books scattered across the different computers and laptops I use, at home and at work. All good with it so far, but it's formatted with NTFS, which doesn't quite work on Mac OS (it can read NTFS drives, but not write to them, out of the box). After a bit of digging I found out that Seagate offers a free version of Paragon NTFS for Mac. Installed and tested. Nice.

Thursday, November 7, 2019

ContactForm github project

For a while now I've wanted to post a personal project on GitHub, with a few features I'm interested in: ASP.NET Core MVC and APIs, Azure DevOps, Azure Functions & AWS Lambda, as well as Docker images. Some other features will be covered in another project I'm working on, but let's keep that a secret until it's available.

The project I'm presenting now is called ContactForm and is available at github.com/OviCrisan/ContactForm (see the link in the right sidebar). It's meant for a simple contact form processing page, which accepts both HTTP POST form data and REST API JSON on the same endpoint (the root of the deployed URL). There is more in the project's readme.md files, along with this diagram that tries to explain the different components:
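Serving both formats from one endpoint mostly comes down to checking the request's Content-Type and picking the matching parser. Below is a minimal sketch of that idea as a small top-level C# program (.NET 5 or later, using System.Text.Json); it is not the project's actual code, and ParseContactBody and the field names (name, email, message) are hypothetical.

```csharp
using System;
using System.Collections.Generic;
using System.Text.Json;

// Hypothetical helper: turns a request body into field/value pairs,
// choosing the parser from the Content-Type header.
static Dictionary<string, string> ParseContactBody(string contentType, string body)
{
    var fields = new Dictionary<string, string>(StringComparer.OrdinalIgnoreCase);
    // Ignore media type parameters such as "; charset=utf-8".
    var mediaType = contentType.Split(';')[0].Trim().ToLowerInvariant();

    // Form encoding uses '+' for spaces, plus %XX escapes.
    static string Decode(string s) => Uri.UnescapeDataString(s.Replace('+', ' '));

    if (mediaType == "application/json")
    {
        using var doc = JsonDocument.Parse(body);
        foreach (var prop in doc.RootElement.EnumerateObject())
            fields[prop.Name] = prop.Value.ValueKind == JsonValueKind.String
                ? prop.Value.GetString()
                : prop.Value.GetRawText();
    }
    else if (mediaType == "application/x-www-form-urlencoded")
    {
        foreach (var pair in body.Split('&', StringSplitOptions.RemoveEmptyEntries))
        {
            var parts = pair.Split('=', 2);
            fields[Decode(parts[0])] = parts.Length > 1 ? Decode(parts[1]) : string.Empty;
        }
    }
    else
    {
        throw new NotSupportedException($"Unsupported content type: {contentType}");
    }
    return fields;
}

var fromForm = ParseContactBody("application/x-www-form-urlencoded",
    "name=Jane+Doe&email=jane%40example.com&message=Hi%21");
var fromJson = ParseContactBody("application/json; charset=utf-8",
    "{\"name\":\"Jane Doe\",\"email\":\"jane@example.com\",\"message\":\"Hi!\"}");

Console.WriteLine(fromForm["email"]);                   // jane@example.com
Console.WriteLine(fromForm["name"] == fromJson["name"]); // True
```

Keying on the media type rather than the whole header keeps requests like "application/json; charset=utf-8" working.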

The core of it is a .NET Standard 2.0 library published to NuGet.org (nuget.org/packages/OviCrisan.ContactForm), which is then used in ContactForm.Web (web app + web API), ContactForm.AzFunc (Azure Function) and ContactForm.AWSLambda (AWS Lambda function). Some libraries use older versions simply because AWS Lambda uses .NET Core 2.1, and also because, at the time of writing, Azure Functions 3.0 is still in preview.

Depending on the settings in the config file or environment variables, it enables email notification and/or webhook posting or a REST API call. Optionally, you can also enable Google reCAPTCHA v2 with the visible checkbox. For this last option you need to register your own set of keys or, for testing, use the sample keys provided by Google, which you can find in the project's readme files.
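Turning features on and off from configuration can be reduced to a check like the one below. This is a hedged sketch as a top-level C# program: the setting names Email:To and Webhook:Url are hypothetical, not the project's real keys, and the settings are passed in as a plain dictionary rather than through IConfiguration.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical setting names. In ASP.NET Core, an environment variable named
// Email__To is read as the configuration key "Email:To".
static List<string> EnabledChannels(IReadOnlyDictionary<string, string> settings)
{
    var channels = new List<string>();
    // Email is on only when a recipient is configured.
    if (settings.TryGetValue("Email:To", out var to) && !string.IsNullOrWhiteSpace(to))
        channels.Add("email");
    // The webhook is on only when its URL is a valid absolute URI.
    if (settings.TryGetValue("Webhook:Url", out var url) && Uri.TryCreate(url, UriKind.Absolute, out _))
        channels.Add("webhook");
    return channels;
}

var config = new Dictionary<string, string>
{
    ["Email:To"] = "owner@example.com",
    ["Webhook:Url"] = "" // empty, so the webhook stays disabled
};
Console.WriteLine(string.Join(", ", EnabledChannels(config))); // email
```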

The web application is also deployed as a Docker image at hub.docker.com/r/OviCrisan/ContactFormWeb, read more here.

The core library and web app have some unit testing projects, but they are very minimal (using xUnit). I also provided an Azure DevOps YAML file for the core library's continuous integration. More additions are coming soon.

Comments, suggestions and bug reports are welcome. Thanks in advance.

Wednesday, November 6, 2019

PostgreSQL 12 on Windows 10

I used previous versions of PostgreSQL, but I installed them with the default Windows installer from the website, which came with a Windows Service that was easy to start / stop. This time I installed the latest version, 12, with Chocolatey, and I couldn't find any Windows Service to start.

So, back to documentation and googling. First I created the environment variable PGDATA pointing to a newly created data folder, like C:\pgdata (easier than specifying that folder in parameters). Then, from C:\Program Files\PostgreSQL\12\bin, I ran:

.\initdb C:\pgdata
.\pg_ctl start
.\createuser --interactive
.\createdb test1

The newly created user, 'postgres', has the superuser role (createuser --interactive asks whether the new role should be a superuser).

Additionally, you can create other users with passwords to be used by the applications.

After that, I prefer to use Valentina Studio, which integrates nicely with Postgres.

Monday, November 4, 2019

Big Azure update today

There are a lot of announcements today from Microsoft Azure, especially for the Ignite event; some of the most important ones, in my opinion:

- Introducing Azure Arc - a set of technologies that unlocks new hybrid scenarios for customers by bringing Azure services and management to any infrastructure.

- Azure Data Share - a safe and secure service for sharing data with third-party organizations.

- Autopilot mode for provisioned throughput is now in preview - Cosmos DB should be automatically scalable, otherwise what's the point? Plus the new v3 SDK.

- SQL Server 2019 is now generally available

- Azure SQL Database serverless - pay for compute per second of actual use, plus storage costs

- Azure Functions - v3 preview + PowerShell support + Premium plan GA. I was recently working on a sample Azure Function project but decided to use v2 instead of the preview v3, for a few reasons (for instance, to reuse the code with an AWS Lambda function).

- Azure Database for PostgreSQL - Hyperscale (Citus) is now available

- Azure Kubernetes Service (AKS) cluster autoscaler

- Azure Spot VMs are now in Preview

- Visual Studio Online is now in preview - VS in the browser, everywhere, cool!

- New Azure API Management developer portal is now generally available

- Free Transport Layer Security (TLS) for Azure App Service is now in preview

- Azure Monitor Prometheus integration is now generally available

Thursday, September 26, 2019

Conditionally added XML element

On a project I'm working on we have to generate some XML documents with a pretty complex structure. In the previous version we used XmlDocument, but the code is pretty verbose, so I checked the XDocument alternative from LINQ to XML.

This new solution works better (it even looks faster), but I was looking for a simple way to skip empty nodes. We didn't want <node></node> or <node />.

So in the end I tried something like this:

using System;
using System.Linq;
using System.Xml.Linq;

namespace ConsoleApp1
{
    class Program
    {
        static void Main(string[] args)
        {
            var b3 = string.Empty; // try "3" to see <b3> included

            XDocument x = new XDocument(
                new XDeclaration("1.0", "utf-8", null),
                new XElement("a",
                    new XElement("b1", "1"),
                    ElemString("b2", "2"),
                    ElemString("b3", b3),              // skipped while b3 is empty
                    new XElement("b4", string.Empty),  // empty content: <b4></b4>
                    new XElement("b5", null),          // no content: <b5 />
                    ElemChildren("c", ElemString("c1", "11"), ElemString("c2", string.Empty)),
                    ElemChildren("d", ElemString("d1", string.Empty), ElemString("d2", string.Empty))
                )
            );

            x.Save(Console.Out);
            Console.ReadKey();
        }

        // Returns the element only if at least one child is not null; otherwise null,
        // which XElement / XDocument silently ignore as content.
        static XElement ElemChildren(string name, params object[] children)
        {
            return children.Any(obj => obj != null) ? new XElement(name, children) : null;
        }

        // Returns the element only for a non-empty string value.
        static XElement ElemString(string name, string val)
        {
            return string.IsNullOrEmpty(val) ? null : new XElement(name, val);
        }
    }
}

which produces:

<?xml version="1.0" encoding="utf-8"?>
<a>
  <b1>1</b1>
  <b2>2</b2>
  <b4></b4>
  <b5 />
  <c>
    <c1>11</c1>
  </c>
</a>

ElemChildren() is used to exclude a node whose children are all null, while ElemString() is a simple ternary helper (shown here only for strings, the most important case).

Notice the difference between <b4></b4> and <b5 />. Also notice that node <d> is missing completely, because all of its child nodes are null.
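ElemString() covers only strings. The same null-returning trick extends to nullable value types such as numbers and dates; ElemValue below is a hypothetical helper in the same style, shown as a small top-level C# program:

```csharp
using System;
using System.Xml.Linq;

// Hypothetical helper: returns an element only when the value is present.
// XElement ignores null content, so a null return simply drops the node.
static XElement ElemValue<T>(string name, T? val) where T : struct
    => val.HasValue ? new XElement(name, val.Value) : null;

int? qty = 5;
DateTime? shipped = null;

var order = new XElement("order",
    ElemValue("qty", qty),
    ElemValue("shipped", shipped));

Console.WriteLine(order);
// <order>
//   <qty>5</qty>
// </order>
```

Because XElement formats the value itself, dates and booleans come out in XML Schema format (for example true rather than True).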

Monday, September 23, 2019

.NET Core 3.0 and .NET Core 3.1 LTS

.NET Core 3.0 was just launched today at .NET Conf, but I also see that a new LTS version has been announced:

.NET Core 3.0 is a ‘current’ release and will be superseded by .NET Core 3.1, targeted for November 2019. .NET Core 3.1 will be a long-term supported (LTS) release (supported for at least 3 years). We recommend that you adopt .NET Core 3.0 and then adopt 3.1. It’ll be very easy to upgrade.

Nice. It's moving very fast; it's hard to keep up with all the newly announced features.

Also don't forget that .NET Core 2.2 will be retired at the end of this year: ".NET Core 2.2 will go EOL on 12/23"

Wednesday, September 11, 2019

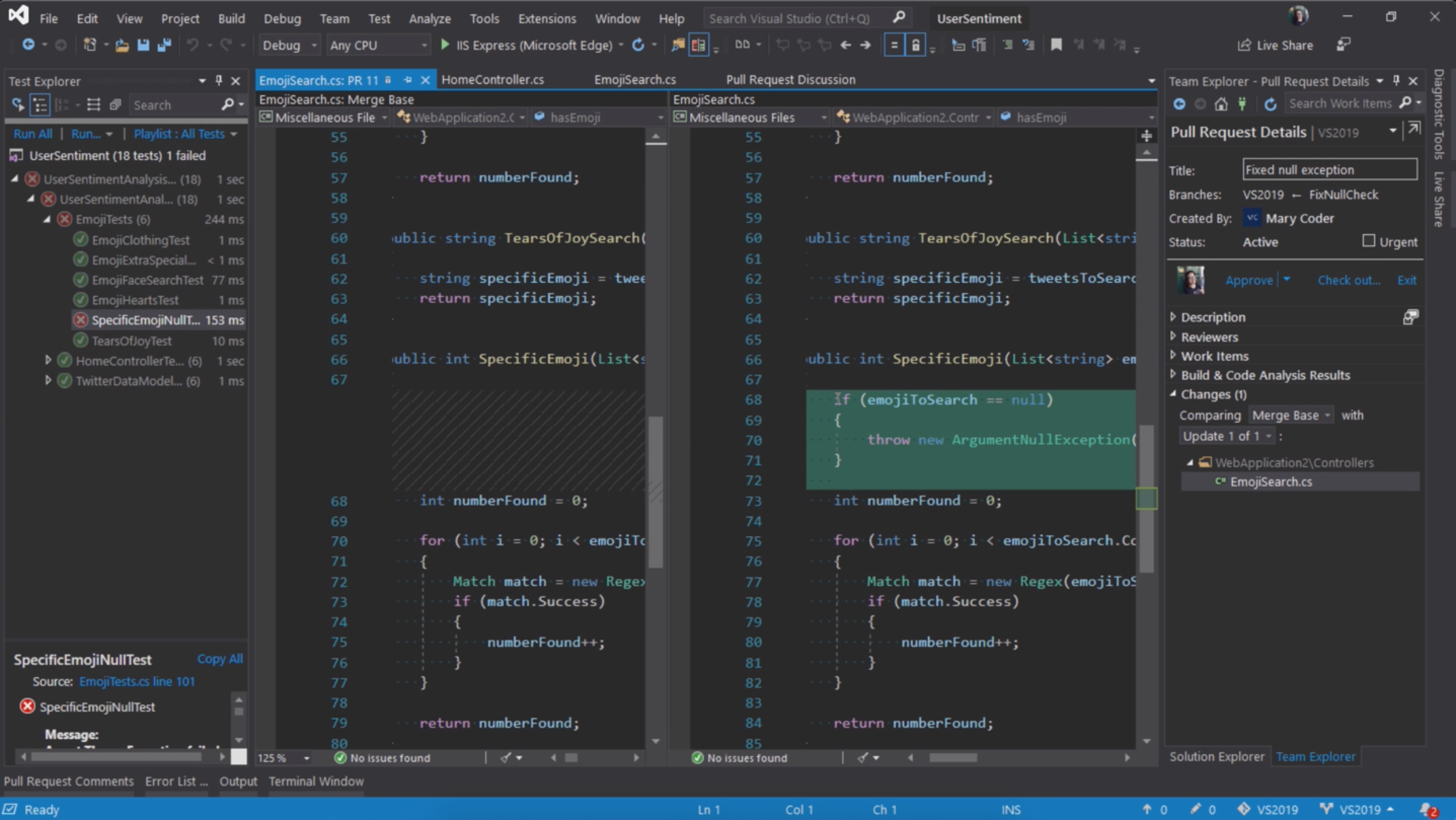

eShopOnWeb PR to allow basket items removal

I opened a pull request from my fork of Microsoft's demo project eShopOnWeb to allow removing basket items by setting their quantity to zero. See the commit 70e009b.

I also added 2 more tests (one unit test and one integration test) for the new functionality, plus another small fix: some async unit test methods now return Task instead of void.

This sample project is a good example of architectural principles, very well described in the eBook that comes with the project. I'm thinking of making some other contributions to it, as coding exercises.
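The rule itself is simple: apply the new quantities, then drop every line whose quantity is zero. A minimal sketch as a top-level C# program, with a hypothetical dictionary-based basket (item id to quantity), not eShopOnWeb's actual Basket class:

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical basket model: catalog item id -> quantity.
// Only illustrates the rule "quantity 0 removes the item"; not eShopOnWeb's code.
static void SetQuantities(Dictionary<int, int> basket, IDictionary<int, int> updates)
{
    // Validate first, so a bad request leaves the basket unchanged.
    if (updates.Values.Any(q => q < 0))
        throw new ArgumentOutOfRangeException(nameof(updates), "Quantity cannot be negative.");

    foreach (var (itemId, quantity) in updates)
    {
        if (basket.ContainsKey(itemId)) // ignore items not in the basket
            basket[itemId] = quantity;
    }

    // Remove every item whose quantity is now zero (ToList avoids modifying while enumerating).
    foreach (var itemId in basket.Where(kv => kv.Value == 0).Select(kv => kv.Key).ToList())
        basket.Remove(itemId);
}

var cart = new Dictionary<int, int> { [1] = 2, [2] = 1, [3] = 4 };
SetQuantities(cart, new Dictionary<int, int> { [2] = 0, [3] = 5 });

Console.WriteLine(string.Join(", ", cart.OrderBy(kv => kv.Key).Select(kv => $"{kv.Key}x{kv.Value}")));
// 1x2, 3x5
```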

Wednesday, September 4, 2019

.NET Core 3.0

Microsoft released .NET Core 3.0 preview 9 today, probably the last preview before the official launch at .NET Conf on Sept. 23rd.

They are also running local events for this launch; I've already registered for one in Toronto, on Oct. 7th. See you there?

Wednesday, June 26, 2019

Software Architecture for Developers by Simon Brown

A book mainly addressed to developers, especially those who want to move into software architecture roles, in an agile environment.

Read more on my LinkedIn article.

Wednesday, June 19, 2019

Designing Autonomous Teams and Services

A review of the book Designing Autonomous Teams and Services by Scott Millett and Nick Tune (O'Reilly, 2017).

Read it on my LinkedIn profile article.

Tuesday, June 18, 2019

SQL SELECT / UPDATE formatting trick

I often use this very simple SQL formatting for both SELECT & UPDATE (usually with MS SQL Server, interactively, in SQL Server Management Studio or some other tool):

SELECT Count(*) -- TOP 20 A.*
-- UPDATE A SET A.Field1=B.Field1
FROM A INNER JOIN B ON A.ID=B.ID
WHERE <condition>

By default, the whole query is just a SELECT Count(*), which doesn't change anything but gives you an idea of the number of records selected now, or updated later.

If I comment out the first part, like this:

SELECT /*Count(*) --*/ TOP 20 A.*

it shows me a sample of the records that will be updated.

Then just undo the change, select the text starting from UPDATE (after the -- marker) down to the end of the query, and execute only the selection. The number of records updated should match the number displayed by Count(*).

It's like 3-in-1, and it can easily go into ticketing system comments or emails.

Achieving DevOps: A Novel About Delivering the Best of Agile, DevOps, and Microservices

A book by Dave Harrison and Knox Lively.

If you are interested in modern software development, with 2019 methodologies, practices and tools, especially on the Microsoft technology stack, this is the book to read. It likely has something for you, as it covers a lot of ground in Agile & DevOps, and everything else that comes with them, chapter by chapter, section by section: teams, processes, tools, testing, automation, infrastructure as code, configurations, continuous integration, continuous delivery & deployment, life / work balance, and more.

Continue reading on my LinkedIn profile article.

Saturday, June 15, 2019

Accelerate: The Science of Lean Software and DevOps

A review of the highly recommended book Accelerate: The Science of Lean Software and DevOps (2018), by Nicole Forsgren PhD, Jez Humble and Gene Kim.

It's based on the Puppet State of DevOps Reports from 2015 to 2018, which I also recommend (read the 2018 report here).

If you think of using Agile & DevOps practices to accelerate software development and continuous delivery this book is a 'must-read'.

Read full article on my LinkedIn.

Sunday, May 19, 2019

Agile story points for bugs

Asking whether bugs should have story points is a bit controversial, although most answers are 'yes, they should'. Jira, for instance, doesn't show the story points field for 'bugs' by default, precisely to discourage assigning them, but the question of how to do it was asked many years ago, with simple answers.

If you take the definition from the Agile Alliance and treat points as a metric for velocity, then yes, it makes sense.

It was also asked many times on various boards and forums, like this one on StackExchange, where I particularly like Joppe's answer:

You should not give points to bug resolution. Consider the fact that the bug comes forth from a story where the developers already earned points for completing the story. It should not receive points again where it actually shouldn't have earned the points to begin with.

Bug fixing should have a negative effect on velocity. Otherwise dropping quality ends up being rewarded with an unaffected or even increased velocity!

Regardless of the many 'yes' answers, there are also a lot of people, like me, who think the answer should be 'no', for a very important reason: the 1st principle of Agile, which states:

Our highest priority is to satisfy the customer through early and continuous delivery of valuable software.

Let's ask a more difficult (related) question: does fixing bugs add value for your customers (or business)? The simple answer is yes, but when you look deeper, don't you think those bugs were broken promises or expectations in the first place? Bugs don't add value; on the contrary.

You promised something, or a specific feature was expected by customers or the business, and it was not delivered as expected. Of course it takes time to get it right, maybe some aspects were far too complex and discovered only later, but it's still a broken promise.

That's exactly the 'technical debt' people talk about, and finding and fixing bugs responsibly is exactly how to reduce it. The theory says their number should decrease over time, but is this true in practice? How do you reduce them if you treat bugs like features and award them points, which increases velocity? A reduced velocity because of bugs is an immediate measure of the quality of work, and can be easily charted over time. How does your bug-fixing trend look?

Saturday, May 11, 2019

Agile team leaders and DevOps

I'm currently taking Microsoft's Open edX course AZ-400.7 Designing a DevOps Strategy where, after an extensive presentation of the Agile manifesto, principles and methodologies, chapter 2, Planning Effective Code Reviews, says:

Team leaders need to establish and foster an appropriate culture across their teams.

What team leaders in Agile? Agile roles are clearly defined (in the context of the Scrum methodology, which in practice almost always goes hand in hand with Agile), and there is no 'team leader' among them; rather, the entire development team is empowered and trusted to self-organize.

Searching Google for 'agile team leader' returns various pages where people explain that it's a bad idea and shouldn't happen in Agile. I beg to differ, but that's another story, for another day.

On the other hand, how do the new DevOps ideas embrace agility? I see other pages comparing the two, often with a tiny 'vs' between them, "Agile vs DevOps", suggesting they are competing terms.

It's true that Agile focuses mainly on communication and relationships between the business (stakeholders and product owner) and the development team, while DevOps takes that dev team one step further, seeing it as a proper software development team plus an operations team (each with its own goals).

And it's also true that DevOps is agile (with a lower case "a"), and is even mentioned, in a way ("continuous delivery"), in the first principle of the Agile Manifesto, but where does the Agile methodology talk about 'DevOps'? DevOps is more than a simple notion of continuous delivery; it encompasses its own principles, processes and tools. Maybe we'll see more of it in future updates of Agile methodologies.

Until then, I'm reading more about something else I came across: Disciplined Agile (DA). One of its layers is clearly named 'Disciplined DevOps'. It looks like DA is more agile than Agile. One thing I like about it is its holistic approach to the entire agile enterprise, not just the IT development department. Another is that it recognizes other important (primary) roles, like architecture owner, team leader or DevOps, as well as supporting roles, all within an agile framework, or toolkit, as they call it.

I'd definitely like to read more about DA[D], as it looks very appealing to me, especially in the context of DevOps.

Friday, April 26, 2019

AZ-102 exam

I've just passed exam AZ-102, Microsoft Azure Administrator Certification Transition, which I had wanted to take for some time, since it will be retired on June 30th.

It covers a lot of Azure administration, especially VNets, AAD, monitoring and migrations. It was interesting to read about the features introduced since my previous 70-533, which you need in order to take this transition exam instead of the full AZ-100 / AZ-101. For instance, I wasn't sure whether they would use the new Azure PowerShell with the 'Az' prefix or the regular 'AzureRm' - it was the latter.

Now I'm ready for the next ones in the pipeline :)

Monday, April 15, 2019

NGINX with custom HTTPS local test domain on Mac OS

This is more a note to myself, because I always forget the easiest way to generate a self-signed, trusted SSL wildcard certificate for a local test domain, like sample.test. You can use the OpenSSL tool to generate one like this:

openssl req -x509 -newkey rsa:2048 -sha256 -days 3650 -nodes -keyout sample.test.key -out sample.test.crt -subj /CN=*.sample.test

You can also add it to your Mac OS Keychain Access with this:

sudo security add-trusted-cert -d -r trustRoot -k /Library/Keychains/System.keychain sample.test.crt

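But in my case it was still not trusted, so it didn't work in Google Chrome (most likely because Chrome ignores the CN and requires a subjectAltName entry, which this command doesn't add).

The easiest way is to use the open-source mkcert. You can install it with brew on Mac OS or download the latest release for your OS. Just follow the docs to install it (including running mkcert -install once, which creates and trusts a local CA) and generate a new certificate, which will also be trusted automatically: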

mkcert sample.test "*.sample.test" localhost 127.0.0.1

After renaming the generated files to sample.test.key and sample.test.crt and copying them into the NGINX folder, change the nginx.conf file (or an included file) to something like this:

# Two local app instances; NGINX uses round-robin by default
upstream sample-test {
    server localhost:5001;
    server localhost:5002;
}

# Redirect all plain HTTP to HTTPS (browsers ignore HSTS sent over plain HTTP)
server {
    listen *:80;
    server_name www.sample.test sample.test;
    add_header Strict-Transport-Security max-age=15768000;
    return 301 https://$host$request_uri;
}

# TLS terminates here; traffic to the upstream is plain HTTP
server {
    listen *:443 ssl;
    server_name www.sample.test sample.test;

    ssl_certificate sample.test.crt;
    ssl_certificate_key sample.test.key;
    ssl_protocols TLSv1.1 TLSv1.2;
    ssl_prefer_server_ciphers on;
    ssl_ciphers "EECDH+AESGCM:EDH+AESGCM:AES256+EECDH:AES256+EDH";
    ssl_ecdh_curve secp384r1;
    ssl_session_cache shared:SSL:10m;
    ssl_session_tickets off;

    add_header Strict-Transport-Security "max-age=63072000; includeSubdomains; preload";
    add_header X-Frame-Options DENY;
    add_header X-Content-Type-Options nosniff;

    access_log /usr/local/etc/nginx/logs/access.log;

    location / {
        proxy_pass http://sample-test;
    }
}

This uses NGINX SSL termination, so traffic from NGINX to the application servers is not encrypted, and it uses an NGINX upstream to load balance HTTP traffic across multiple (2) web application instances. All traffic on port 80 (HTTP) is automatically redirected to port 443 (HTTPS), which uses the newly generated certificates.
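NGINX distributes requests across the servers of an upstream block with (weighted) round-robin by default. The selection logic can be sketched as a small top-level C# program; this is a simplified illustration, not how NGINX implements it, since the real module also honors weights and skips servers marked as failed (max_fails / fail_timeout).

```csharp
using System;
using System.Threading;

// Servers from the upstream block above.
string[] upstream = { "localhost:5001", "localhost:5002" };
int counter = -1;

// Thread-safe round-robin: each call takes the next server in order, wrapping around.
string NextServer()
{
    // Interlocked.Increment returns the new value; masking keeps the index non-negative after overflow.
    var n = Interlocked.Increment(ref counter);
    return upstream[(n & int.MaxValue) % upstream.Length];
}

for (var i = 0; i < 4; i++)
    Console.WriteLine(NextServer());
// prints localhost:5001, localhost:5002, localhost:5001, localhost:5002 (one per line)
```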

Tuesday, April 9, 2019

Google Cloud Run

An interesting announcement today from Google: Cloud Run. See the details and pricing, which, interestingly enough, is rounded up to the nearest 100 milliseconds.

My first impression is that Google is offering something similar to Azure Container Instances, which has the same idea of deploying workloads in a serverless way using containers, billed per second (Azure) or per 100 milliseconds (GCP).

One good thing about the GCP offer is the Cloud Run free quota, with 2 million free requests, excellent for trying it out. On the downside, they don't seem to support .NET Core out of the box, only other languages like Node.js, Python or Golang (although, being container-based, a .NET Core container image should in principle work as well).
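Billing rounded up to the nearest 100 ms means, for example, that a 120 ms request is billed as 200 ms. A small top-level C# sketch of that arithmetic, with made-up durations; it ignores the rest of the pricing model (free tier, CPU and memory rates, concurrency):

```csharp
using System;

// Rounds a duration up to the next multiple of the billing increment.
static long BillableMs(long actualMs, long incrementMs = 100)
{
    if (actualMs < 0) throw new ArgumentOutOfRangeException(nameof(actualMs));
    return (actualMs + incrementMs - 1) / incrementMs * incrementMs;
}

long[] samples = { 1, 100, 120, 999 };
foreach (var ms in samples)
    Console.WriteLine($"{ms} ms -> {BillableMs(ms)} ms billed");
// 1 ms -> 100 ms billed
// 100 ms -> 100 ms billed
// 120 ms -> 200 ms billed
// 999 ms -> 1000 ms billed

// Per-second billing is the same formula with a 1000 ms increment.
Console.WriteLine(BillableMs(120, 1000)); // 1000
```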

Read the full announcement for all details and features, like the full stack of serverless apps.

This is something I'd definitely want to try.

Tuesday, April 2, 2019

Visual Studio 2019

If you follow Microsoft's news, you know they launched Visual Studio 2019 today. I installed it some weeks ago and it worked fine on Windows, apart from the hiccup I had on Mac with Preview 3. Download it from here.

Watching some videos and reading some articles on VS 2019, I found out about some interesting things I wasn't aware of before:

- Live Share works with VS 2017 and Visual Studio Code too, just install the appropriate extensions. Super handy.

- Solution filters: load only the projects you need right away, and load dependencies easily with the click of a button (notice the "unloaded" projects):

- Even better, you can save that list as a *.slnf file, and reopen it easily next time.

- CodeLens in the Community edition.

- ML-enhanced search. Very easy to add a class or interface from the search textbox (CTRL-Q).

- IntelliCode: analyzes your code (ML) for improved IntelliSense suggestions (notice the star in front of frequently used properties or methods):

- Integrated code reviews (pull requests):

See the whole list of new features.

Sunday, March 31, 2019

.NET Core decorator pattern with Lamar

Two days ago I read some news about Lamar 3.0, the new version of the Lamar dependency injection library, meant to replace the well-established but now retired StructureMap. Since yesterday I tested decorators with Scrutor, I wanted to try something similar with Lamar, a chance to use it for the first time.

The docs are very minimal, and it took me a while to understand that I had to add Shouldly, which I hadn't used before either. Of course I didn't read the entire documentation before trying Lamar, so their example didn't work for me. Eventually I went back to StructureMap decorators and used them similarly, like this:

This way it seems to work fine, but I think the documentation still needs some work. I promise, though, to try their GitHub test samples a bit more, which hopefully will clarify many aspects.

One thing I read about it and liked is that it plays nicely with the default .NET Core dependency injection, implementing IServiceCollection.

.NET Core decorator pattern with DI and Scrutor

A few days ago I tested .NET Fiddle with some .NET code, but since then I found out its biggest limitation: it doesn't work with .NET Core. Only later did I notice that the .NET Framework version is 4.5 and, most importantly, that it can't be changed.

So my next test, for the decorator pattern, failed, as I wanted to use the Scrutor library with the default .NET Core dependency injection.

Consequently, here's the Gist from GitHub instead:

As you can see, with this small library it's very easy to add new functionality to existing objects (using the Decorate() DI extension method), extending them, especially when we don't have access to the source code to change those objects directly. Besides decorators, it can also scan assemblies (which is in fact the library's main functionality), working with the default .NET Core dependency injection.

In this case I simulated some sort of logging (to the console) after interface method calls, but the same approach can be used for many other purposes, like adding caching, authorization (before calls), notifications, and so on.
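The Gist itself isn't reproduced here, so the following is a minimal, library-free sketch of the same idea. The decorator implements the same interface as the object it wraps and adds console logging after each call, without touching the original class. IGreeter, Greeter and LoggingGreeter are hypothetical names. The Scrutor registration in the comment reflects my understanding of its Decorate<TService, TDecorator>() extension and is not taken from the original Gist.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical interface and implementation, for illustration only.
public interface IGreeter
{
    string Greet(string name);
}

public class Greeter : IGreeter
{
    public string Greet(string name) => $"Hello, {name}!";
}

// Decorator: implements the same interface and wraps another IGreeter,
// adding logging after each call without changing Greeter's source.
public class LoggingGreeter : IGreeter
{
    private readonly IGreeter _inner;

    public LoggingGreeter(IGreeter inner) => _inner = inner;

    // Kept in memory so the behaviour is easy to check; a real app would likely use ILogger.
    public List<string> Log { get; } = new List<string>();

    public string Greet(string name)
    {
        string result = _inner.Greet(name);   // call the wrapped object first
        string entry = $"Greet({name}) returned '{result}'";
        Log.Add(entry);
        Console.WriteLine(entry);             // "logging" on the console, after the call
        return result;
    }
}

public static class Program
{
    public static void Main()
    {
        // Manual wiring. With Scrutor the registration would be roughly:
        //   services.AddTransient<IGreeter, Greeter>();
        //   services.Decorate<IGreeter, LoggingGreeter>();
        IGreeter greeter = new LoggingGreeter(new Greeter());
        Console.WriteLine(greeter.Greet("world"));
    }
}
```

Callers depend only on IGreeter, so they can't tell whether they received the plain Greeter or the decorated one. That is what makes it possible to add caching or authorization the same way.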

Saturday, March 30, 2019

ASP.NET Core Identity with MediatR

A good way to play with ASP.NET Core 3.0 Identity (using VS 2019 preview) and MediatR is this sample project on GitHub.

MediatR is a small but very useful utility library whose main goal is to implement the Mediator pattern, but it's more than that. For instance, it comes with ASP.NET Core dependency injection extensions, pipelines (or behaviors) that implement the decorator pattern, and a few other goodies. Simply speaking, it may be a first step toward CQRS, splitting up queries and commands. In many cases it's used more like a command pattern, I think.

In my case I wanted to use MediatR to extract all references to Microsoft.AspNetCore.Identity from the identity Razor pages and move that code into handlers. This should help unit testing, because the page's OnGetAsync() or OnPostAsync() only calls the mediator's Send() method, which does the job without any reference to Identity's SignInManager class.

To start with, just create a new ASP.NET Core web application with individual (local) user accounts, so the entire identity back-end is already created. Then run the database migration:

dotnet ef database update

If you run the application at this stage it should work, so you should be able to register a new account and log in. By default, ASP.NET Core uses the default Identity UI, which hides all the details from us. If we want to customize the login, for instance, as we do here, we need to scaffold the pages and change them manually in the project (see this docs link):

dotnet tool install -g dotnet-aspnet-codegenerator

dotnet add package Microsoft.VisualStudio.Web.CodeGeneration.Design

dotnet restore

To actually generate the pages, use this:

dotnet aspnet-codegenerator identity -dc SampleMediatR.Data.ApplicationDbContext --files "Account.Register;Account.Login;Account.Logout"

The newly generated login, logout and registration pages are available under /Areas/Identity/Pages/Account:

At this stage, if you want to run the application, it's important to comment out the AddDefaultUI() call in ConfigureServices() in Startup.cs, because we don't need it anymore once the pages are scaffolded into the app:

services.AddDefaultIdentity<IdentityUser>()
    //.AddDefaultUI(UIFramework.Bootstrap4)
    .AddEntityFrameworkStores<ApplicationDbContext>();

Actually, the scaffolding operation created a new file in the root of the project called ScaffoldingReadme.txt, with instructions on how to set it up.

Also, I created a new folder called 'MediatR' under /Areas/Identity for the classes needed by the MediatR library, but first let's add the package references:

dotnet add package MediatR

dotnet add package MediatR.Extensions.Microsoft.DependencyInjection

Then register it for dependency injection in ConfigureServices() in Startup.cs:

services.AddMediatR();

Now we are ready to implement the query and handler classes needed by MediatR. For instance, MediatR/LoginGet.cs has 2 classes: a query (request) called "LoginGet" and a handler called "LoginGetHandler". The first one wraps the data passed to the handler, while the second is the handler that does the job in a method called Handle(), which the MediatR library calls automatically.

using System.Collections.Generic;
using System.Threading;
using System.Threading.Tasks;
using MediatR;
using Microsoft.AspNetCore.Authentication;
using Microsoft.AspNetCore.Http;
using Microsoft.AspNetCore.Identity;

public class LoginGet : IRequest<IEnumerable<AuthenticationScheme>> { }

public class LoginGetHandler : IRequestHandler<LoginGet, IEnumerable<AuthenticationScheme>>
{
    private readonly SignInManager<IdentityUser> _signInManager;
    private readonly IHttpContextAccessor _httpContextAccessor;

    public LoginGetHandler(SignInManager<IdentityUser> signInManager, IHttpContextAccessor httpContextAccessor)
    {
        _signInManager = signInManager;
        _httpContextAccessor = httpContextAccessor;
    }

    public async Task<IEnumerable<AuthenticationScheme>> Handle(LoginGet request, CancellationToken cancellationToken)
    {
        // Clear any existing external cookie, as the scaffolded OnGetAsync() did
        await _httpContextAccessor.HttpContext.SignOutAsync(IdentityConstants.ExternalScheme);
        return await _signInManager.GetExternalAuthenticationSchemesAsync();
    }
}

Notice a couple of things:

- The LoginGet class implements MediatR's IRequest interface with type IEnumerable<AuthenticationScheme>, which is exactly the type returned by the Handle() method. In this case the class doesn't need any properties (but see some in LoginPost.cs).

- Both SignInManager and IHttpContextAccessor are injected from DI into the LoginGetHandler constructor (IHttpContextAccessor is registered in Startup.cs with services.AddHttpContextAccessor(), just to get access to HttpContext in a safe way).

- The Handle() method uses SignInManager and IHttpContextAccessor to call the necessary functions.

With that in place, OnGetAsync() in Login.cshtml.cs (with IMediator injected into the page model as _mediator) only needs this call:

ExternalLogins = (await _mediator.Send(new LoginGet())).ToList();

All the previous logic that didn't belong in the OnGetAsync() page handler was moved to the MediatR handler. The same was done for OnPostAsync(), as well as for OnPostAsync() in Logout.cshtml.cs.

See the code for other details.
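To make the request/handler split concrete without depending on the library, here is a hand-rolled sketch of how a mediator can route a request object to its single handler by type. IRequest<T>, IRequestHandler<,> and SimpleMediator below are stand-ins that only mimic the shape of MediatR's types; they are not the real library. MediatR resolves handlers from the DI container instead of using a manual Register() call. GetGreeting and GetGreetingHandler are hypothetical.

```csharp
using System;
using System.Collections.Generic;
using System.Threading;
using System.Threading.Tasks;

// Hand-written stand-ins shaped like MediatR's request/handler interfaces (not the real ones).
public interface IRequest<TResponse> { }

public interface IRequestHandler<TRequest, TResponse> where TRequest : IRequest<TResponse>
{
    Task<TResponse> Handle(TRequest request, CancellationToken cancellationToken);
}

// Minimal dispatcher: maps each request type to exactly one handler.
public class SimpleMediator
{
    private readonly Dictionary<Type, Func<object, CancellationToken, object>> _handlers =
        new Dictionary<Type, Func<object, CancellationToken, object>>();

    public void Register<TRequest, TResponse>(IRequestHandler<TRequest, TResponse> handler)
        where TRequest : IRequest<TResponse>
    {
        // Store a wrapper so Send() can invoke the handler without knowing TRequest statically.
        _handlers[typeof(TRequest)] = (request, ct) => handler.Handle((TRequest)request, ct);
    }

    public Task<TResponse> Send<TResponse>(IRequest<TResponse> request, CancellationToken cancellationToken = default)
    {
        if (!_handlers.TryGetValue(request.GetType(), out var handler))
            throw new InvalidOperationException($"No handler registered for {request.GetType().Name}");
        return (Task<TResponse>)handler(request, cancellationToken);
    }
}

// Hypothetical request/handler pair, shaped like LoginGet / LoginGetHandler.
public class GetGreeting : IRequest<string>
{
    public string Name { get; set; }
}

public class GetGreetingHandler : IRequestHandler<GetGreeting, string>
{
    public Task<string> Handle(GetGreeting request, CancellationToken cancellationToken)
        => Task.FromResult($"Hello, {request.Name}!");
}

public static class Program
{
    public static async Task Main()
    {
        var mediator = new SimpleMediator();
        mediator.Register(new GetGreetingHandler());
        // The caller only knows the request type, never the handler.
        string greeting = await mediator.Send(new GetGreeting { Name = "world" });
        Console.WriteLine(greeting);
    }
}
```

The response type is inferred from the IRequest<T> the request implements, which is why the page can simply write Send(new LoginGet()) and get an IEnumerable<AuthenticationScheme> back.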

Monday, March 25, 2019

Visitor pattern on .NET Fiddle

A few days ago I discovered .NET Fiddle, which I wanted to try with something, so I tested it with 2 samples for the visitor pattern: dotnetfiddle.net/KLQ3i4 and dotnetfiddle.net/yLYvY9. Cool.

A very convenient way to play with C# code, run it in the browser and share it online. See my profile.

Saturday, March 23, 2019

Class diagrams refresher for design patterns in C#

We all know the concept of design patterns as well-known, reusable solutions to various software design problems. We may have read the books, including the famous Design Patterns: Elements of Reusable Object-Oriented Software, but also others, or checked sites like OODesign.com or DOFactory.com, especially for C#.

One mistake that I think happens many times is treating all those patterns equally, when some of them are used far more often than others. My recommendation is to learn them that way: start with something you may actually use, and add more over time, when needed. Another mistake is to consider only those listed in that book or on the websites mentioned, forgetting others described in other sources (the list on Wikipedia is much longer).

However, when it comes to learning those patterns, my suggestion is to focus on the UML class diagrams provided.

Let's take as an example a behavioural pattern that's very likely to be used in complex architectures: Mediator. Here's the UML diagram from Wikipedia:

(Actually, the other image there is much better because it has more information and uses the correct relationship arrows instead of these non-standard ones, but it also includes a sequence diagram, which I tried to avoid for now, for simplicity.)

You also have the C# code as an example, but my question to you is: what's easier to read, the diagram or the C# code? I'm pretty sure many will answer the latter, but why? UML has many objectives in scope, some of them obsolete these days, like using CASE tools to generate code, but don't forget the main one: to draw a picture that's worth a thousand words. Just seeing that diagram should make things clear for many developers, because that's its purpose: to simplify communication between developers.

I was looking for a good UML class diagram refresher with meaningful code examples in C#, and because I couldn't find one, here's my attempt. Some inspiration comes from Agile Principles, Patterns, and Practices in C# by Robert C. Martin and Micah Martin (see chapters 13 and 19).

Class diagrams (made with draw.io) show a name, attributes (fields or properties) and messages (methods):

Which in C# may look like this:

public class ThingA {
    public int attribute1 { get; set; }
    private string attribute2;
    public int Message1() { /* ... */ return 0; }
    protected bool Message2(string s) { /* ... */ return false; }
    private void Message3(IEnumerable<int> values) { /* ... */ }
}

public abstract class AbstractThingA {
    public virtual int attribute1 { get; set; }
    public abstract int Message1();
}

public interface IThingA {
    // interface members are implicitly public
    int attribute1 { get; set; }
    int Message1();
}

Notice here:

- Access modifiers (visibility in UML): '+' means public, '-' means private and '#' means protected;

- Abstract classes are denoted by an italic font (used on DOFactory.com, for instance), or by the '<<abstract>>' stereotype;

- For interfaces you can use the '<<interface>>' stereotype (above the interface name) or just prefix the name with 'I' (my preferred method);

- Constants may be uppercase, and static elements underlined (not used here).

There may be different preferences when implementing class diagrams, but in C# I think the best option is to implement private attributes as class fields and public attributes as properties. Also, in abstract classes it's up to you whether you implement a method (or property) as abstract or virtual (be aware of the difference), which is just an implementation detail.
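Here is the abstract vs. virtual difference as a small sketch. The class and method names are illustrative only. An abstract member has no body and must be overridden by every concrete subclass. A virtual member provides a default implementation that subclasses may keep or override.

```csharp
using System;

public abstract class AbstractThingA
{
    // abstract: no body; every concrete subclass must override it.
    public abstract int Message1();

    // virtual: has a default body; subclasses may override it, or not.
    public virtual int Message2() => 1;
}

public class ThingA : AbstractThingA
{
    // Required: without this override ThingA would not compile (CS0534).
    public override int Message1() => 10;
    // Message2() is not overridden, so the base implementation is used.
}

public class AnotherThingA : AbstractThingA
{
    public override int Message1() => 20;
    public override int Message2() => 2;   // optional override
}

public static class Program
{
    public static void Main()
    {
        AbstractThingA a = new ThingA();
        AbstractThingA b = new AnotherThingA();
        Console.WriteLine($"{a.Message1()} {a.Message2()}"); // 10 1
        Console.WriteLine($"{b.Message1()} {b.Message2()}"); // 20 2
    }
}
```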

This is easy, right? Now let's continue with the relationships between classes and interfaces, which need to be clearly understood in order to 'read' UML class diagrams effectively. Here we have a few options:

- Inheritance (----|>) - ThingA class inherits from AbstractThingA:

public abstract class AbstractThingA { /* .. */ }

public class ThingA: AbstractThingA { /* .. */ }

- Implementation or realization (- - - -|>) - ThingA class implements interface IThingA:

public interface IThingA { /* .. */ }

public class ThingA: IThingA { /* .. */ }

- Association (---->): class ThingA uses class ThingB. There are various ways to 'use' a class, like:

- A local variable in a method of a ThingA object:

public class ThingA {
    public int Message1() {
        ThingB b = new ThingB();
        return b.MessageB1();
    }
}

- An object of type ThingB passed as a parameter to a method of a ThingA object:

public class ThingA {
    public int Message1(ThingB b) {
        return b.MessageB1();
    }
}

- Another option is returning an object of type ThingB from a method of ThingA. These differences may be specified with stereotypes above the arrow: "<<local>>", "<<parameter>>" or "<<create>>", respectively.

- Aggregation (<>----->) is a special kind of association where class ThingA "has a" ThingB object, but the ThingB object may exist on its own:

public class ThingA {
    private ThingB b;

    public ThingA(ThingB b) {
        this.b = b;
    }

    // then use b.MessageB1()
}

- Composition (<|>----->, drawn with a filled diamond) is yet another kind of association where class ThingA "has a" ThingB object, but the ThingB object can't exist on its own (the part only makes sense in the context of the whole):

public class ThingA {
    private ThingB b = new ThingB();

    // then use b.MessageB1()
}

- Dependency (- - - - >) usually means "everything else": an object of type ThingB is used somehow by an object of type ThingA, other than through the relationships described above. It's a weaker relationship, but it's still included in the class diagram.

Note that these implementations don't have to be written exactly this way; you may choose similar solutions with the same results.

Other important details:

- Multiplicity: 0 (no instance of the dependent object exists), 1 (exactly one instance exists), 0..1 (zero or one instance exists), * or 0..* (any number of instances may exist, usually meaning some sort of collection):

class ThingA {
    private ThingB objB;
}

- Attribute name: on the arrow we can specify the name of the attribute holding the dependent objects ("collB" here):

class ThingA {
    private List<ThingB> collB;
}

- Association qualifier: on the source class we have some key or collection to keep track of the dependent object ("keyB" here):

class ThingA {
    private string keyB;

    public ThingB B {
        get { return new ThingB(keyB); }
    }
}
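In practice, a qualifier often maps to a keyed collection on the source class: given a key value, the source reaches at most one target object. Below is a minimal sketch of that reading. It assumes ThingB carries its own Key; the Add() and Find() members are hypothetical.

```csharp
using System;
using System.Collections.Generic;

public class ThingB
{
    public string Key { get; }
    public ThingB(string key) { Key = key; }
}

// ThingA --[keyB]--> ThingB: for a given key, ThingA yields at most one ThingB.
public class ThingA
{
    private readonly Dictionary<string, ThingB> _byKey = new Dictionary<string, ThingB>();

    public void Add(ThingB b) => _byKey[b.Key] = b;

    // Returns null when no ThingB is associated with this key (0..1 per key).
    public ThingB Find(string keyB) => _byKey.TryGetValue(keyB, out var b) ? b : null;
}

public static class Program
{
    public static void Main()
    {
        var a = new ThingA();
        a.Add(new ThingB("x"));
        Console.WriteLine(a.Find("x")?.Key ?? "none"); // x
        Console.WriteLine(a.Find("y")?.Key ?? "none"); // none
    }
}
```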

After all these details, let's look at the Mediator pattern as described on the DOFactory.com website:

Is it now a bit clearer that Mediator is an abstract class with ConcreteMediator as a derived class, and that the Colleague abstract class has an attribute called 'mediator' (of type Mediator) and 2 derived classes, ConcreteColleague1 and ConcreteColleague2, both used by ConcreteMediator? So, we can translate this into C# code as:

abstract class Mediator { }

class ConcreteMediator : Mediator {
    private ConcreteColleague1 colleague1;
    public ConcreteColleague1 Colleague1 {
        set { colleague1 = value; }
    }

    private ConcreteColleague2 colleague2;
    public ConcreteColleague2 Colleague2 {
        set { colleague2 = value; }
    }
}

abstract class Colleague {
    protected Mediator mediator;

    public Colleague(Mediator mediator) {
        this.mediator = mediator;
    }
}

class ConcreteColleague1 : Colleague {
    public ConcreteColleague1(Mediator mediator) : base(mediator) { }
}

class ConcreteColleague2 : Colleague {
    public ConcreteColleague2(Mediator mediator) : base(mediator) { }
}

class Program {
    static void Main() {
        ConcreteMediator m = new ConcreteMediator();
        ConcreteColleague1 c1 = new ConcreteColleague1(m);
        ConcreteColleague2 c2 = new ConcreteColleague2(m);
        m.Colleague1 = c1;
        m.Colleague2 = c2;
    }
}

That's all the information we can extract from this diagram; for other details we'd need other diagrams, like a sequence diagram. Also, I chose to expose the colleagues through public setters only. In ConcreteMediator we have access to the colleague1 & colleague2 private fields, which can be used by whatever functionality we implement in that mediator class.

On the website you can see a full C# implementation.
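The class diagram doesn't say how the colleagues actually talk to each other. One common approach, in the spirit of the classic GoF structure, is for a colleague to hand a message to its mediator, which forwards it to the other colleague. That way the colleagues never reference each other directly. This is a minimal sketch of that idea. The Send()/Notify() methods and the Received list are assumptions added for illustration and may differ from the DOFactory.com implementation.

```csharp
using System;
using System.Collections.Generic;

abstract class Mediator
{
    // Assumed message-passing method; not part of the class diagram itself.
    public abstract void Send(string message, Colleague sender);
}

class ConcreteMediator : Mediator
{
    private ConcreteColleague1 colleague1;
    private ConcreteColleague2 colleague2;

    public ConcreteColleague1 Colleague1 { set { colleague1 = value; } }
    public ConcreteColleague2 Colleague2 { set { colleague2 = value; } }

    // Route the message to the *other* colleague.
    public override void Send(string message, Colleague sender)
    {
        if (sender == colleague1)
            colleague2.Notify(message);
        else
            colleague1.Notify(message);
    }
}

abstract class Colleague
{
    protected Mediator mediator;
    public List<string> Received { get; } = new List<string>();

    protected Colleague(Mediator mediator) { this.mediator = mediator; }

    public void Send(string message) => mediator.Send(message, this);
    public void Notify(string message) => Received.Add(message);
}

class ConcreteColleague1 : Colleague
{
    public ConcreteColleague1(Mediator mediator) : base(mediator) { }
}

class ConcreteColleague2 : Colleague
{
    public ConcreteColleague2(Mediator mediator) : base(mediator) { }
}

static class Program
{
    static void Main()
    {
        var m = new ConcreteMediator();
        var c1 = new ConcreteColleague1(m);
        var c2 = new ConcreteColleague2(m);
        m.Colleague1 = c1;
        m.Colleague2 = c2;

        c1.Send("How are you?");
        c2.Send("Fine, thanks");

        Console.WriteLine(string.Join(", ", c2.Received)); // How are you?
        Console.WriteLine(string.Join(", ", c1.Received)); // Fine, thanks
    }
}
```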

Getting used to "reading" class diagrams may help with understanding and implementing design patterns, because I think it's easier to remember the differences and details of various patterns graphically than through implementation code. Also, in many cases it's more important to understand the classes, interfaces and relationships between them than any particular implementation, which can vary slightly from project to project. For instance, you can use public properties or fields, or constructor injection, for how the objects are created and passed around. Even for the mediator example above there may be other solutions with similar functionality, but the pattern itself is described by the class diagram, not by implementation details.

Friday, March 22, 2019

Uninstalling .NET Core 3.0 preview3

A few days ago I installed VS 2019 (preview, on Mac OS) with .NET Core 3.0 preview 3 (the latest at this time), and all instances of Visual Studio (including 2017) stopped working when running an application from the IDE. It worked from the CLI, but not from the IDE, which was a nuisance. What was strange is that it also failed for .NET Core 2.2 projects in VS 2017, even though .NET Core 3.0 was only meant for VS 2019.

The error looked like this:

/usr/local/share/dotnet/sdk/3.0.100-preview3-010431/Sdks/Microsoft.NET.Sdk/targets/Microsoft.PackageDependencyResolution.targets(5,5): Error MSB4018: The "ResolvePackageAssets" task failed unexpectedly.

System.TypeLoadException: Could not resolve type with token 0100003f from typeref (expected class 'NuGet.Packaging.Core.PackageDependency' in assembly 'NuGet.Packaging, Version=5.0.0.4, Culture=neutral, PublicKeyToken=31bf3856ad364e35') [...]

I searched online and found 2 open issues on GitHub related to this, #2460 & #2461 (see my comments there).

I waited some time, but then I just decided to uninstall .NET Core 3.0 preview 3 as follows:

$ dotnet --list-sdks

[...]

3.0.100-preview3-010431 /usr/local/share/dotnet/sdk

$ sudo rm -rf /usr/local/share/dotnet/sdk/3.0.100-preview3-010431

$ dotnet --list-runtimes

[...]

Microsoft.AspNetCore.App 3.0.0-preview3-19153-02 /usr/local/share/dotnet/shared/Microsoft.AspNetCore.App

Microsoft.NETCore.App 3.0.0-preview3-27503-5 /usr/local/share/dotnet/shared/Microsoft.NETCore.App

$ sudo rm -rf /usr/local/share/dotnet/shared/Microsoft.AspNetCore.App/3.0.0-preview3-19153-02

$ sudo rm -rf /usr/local/share/dotnet/shared/Microsoft.NETCore.App/3.0.0-preview3-27503-5

(I also tried installing just the runtime, but it didn't help.)

Uninstalling a version of .NET Core is pretty easy: just delete its folder under /usr/local/share/dotnet/sdk (plus, as above, the matching runtime folders under /usr/local/share/dotnet/shared) and restart VS. Then check in the IDE under Tools -> SDK Manager, .NET Core:

Done. Now all my previous .NET Core 2.2 projects run again from the IDE.

I'll definitely try again later with another version of .NET Core 3.0.

Wednesday, March 13, 2019

Traefik getting started

After the previous post I really wanted to get started and do some testing with Traefik.io. I started off on the wrong foot, but eventually everything worked out fine. Many times, having some issues with a new tool or technology is the best way to get you digging into the details and learning a lot.

To begin with, I wanted to use it as a simple reverse proxy, similar to NGINX. I installed Traefik locally (very simple, just an executable) and used my SiteGo test project, both as a simple local application and via its Docker image:

docker run -t -i -p 8000:8000 ovicrisan/sitego

Then I created the sitego.toml file needed for the Traefik configuration, like this:

[entryPoints]
  [entryPoints.http]
  address = ":8081"

[frontends]
  [frontends.frontend1]
  backend = "backend1"
    [frontends.frontend1.routes.test_1]
    rule = "Host:localhost"

[backends]
  [backends.backend1]
    [backends.backend1.servers.server1]
    url = "http://localhost:8000"

[file]

#[api]

And start it locally with:

traefik -c sitego.toml --accessLog

-c specifies the config file ('sitego.toml'), and --accessLog shows all requests in the terminal / console.

Opening http://localhost:8081 in the browser worked fine.

A very important note here: don't forget the [file] config setting, otherwise you'll get a 404 error ("page not found") with the message "backend not found". That setting defines a 'provider', which is necessary for everything to work. Another option is to pass all config settings as parameters on the command line (or in the Docker command), but for simple projects I prefer a TOML file.

You can enable / disable [api] (uncomment it) to access the Web UI (dashboard) for metrics and stuff, then just load it at http://localhost:8080.

I intentionally didn't run it from a Docker container, and skipped TLS (https) and other details, just to keep it to a bare minimum. I'll definitely use it for much more, including some ASP.NET Core microservices.

Monday, March 11, 2019

NGINX (to Join F5) vs Traefik

I read today's news, NGINX to Join F5: Proud to Finish One Chapter and Excited to Start the Next, which made me think again about what may come next. Probably Traefik.io.

I've been using NGINX for some years, mostly for some small websites (PHP), but also as a reverse proxy for ASP.NET Core apps. I like that it has a small footprint, and the open source version was enough for what I needed. It's not difficult to set up, and upstream servers are pretty good for load balancing.

Of course I've read "And make no mistake about it: F5 is committed to keeping the NGINX brand and open source technology alive", and it will probably continue this way for years to come, but still, this may be a good chance to look at Traefik with different eyes.

I haven't used it for anything yet, but I'll try it very soon, because from what I've read and seen, it seems a better fit for microservices / Docker / K8s clusters and the like. It looks better integrated with lots of backends and has excellent features (like automatic Let's Encrypt support + metrics).

I'm not saying bye to NGINX, but welcome, Traefik. See this picture, isn't it cool?

Unit testing protected async method with Moq, XUnit and ASP.NET Core

There are a few good examples online about mocking protected methods in xUnit tests with Moq, but this example combines that with async/await, for a more realistic sample.

We'll work with the default Visual Studio ASP.NET Core MVC template, adding just a few lines of code, plus an additional project for unit testing.

First, we add a new HomeService.cs class with an interface that has 2 methods to increment and decrement a given value:

using System.Threading.Tasks;

public interface IHomeService
{
    Task<int> IncrAsync(int i);
    Task<int> DecrAsync(int i);
}

public class HomeService : IHomeService
{
    public Task<int> DecrAsync(int i)
    {
        return OpAsync(i, -1);
    }

    public Task<int> IncrAsync(int i)
    {
        return OpAsync(i, 1);
    }

    // Shared helper used by both public methods; simulates a slow operation
    protected async Task<int> OpAsync(int i, int step)
    {
        return await Task.Run(async () =>
        {
            await Task.Delay(3000);   // non-blocking 3-second wait
            //Thread.Sleep(3000);     // blocking alternative, not used
            return i + step;
        });
    }
}

Here, for simplicity, both public interface methods use a single protected (non-public) method. In real code there are many situations where a protected method makes sense.

Instead of the blocking Thread.Sleep(), I used the awaitable Task.Delay() to wait for 3 seconds. Note that without the await keyword the delay doesn't hold anything up: execution just continues immediately. When IncrAsync() and DecrAsync() are both called, one after the other, the code waits 3 + 3 seconds, which is very noticeable in unit tests.
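To see what the await keyword changes, here is a minimal sketch using only the base class library. The class name DelayDemo and the 300 ms delay are arbitrary choices for illustration, not part of the sample project. It times an awaited Task.Delay(), a Task.Delay() that is started but never awaited, and two awaited delays in a row (the same 3 + 3 pattern as IncrAsync() followed by DecrAsync()).

```csharp
using System.Diagnostics;
using System.Threading.Tasks;

// Hypothetical demo class: compares awaited and non-awaited Task.Delay().
public static class DelayDemo
{
    // The method resumes only after the delay completes; unlike Thread.Sleep(),
    // the calling thread is not blocked while waiting.
    public static async Task<long> AwaitedAsync(int ms)
    {
        var sw = Stopwatch.StartNew();
        await Task.Delay(ms);
        return sw.ElapsedMilliseconds;
    }

    // The delay task is started, but nothing waits for it,
    // so execution continues immediately.
    public static long NotAwaited(int ms)
    {
        var sw = Stopwatch.StartNew();
        _ = Task.Delay(ms);
        return sw.ElapsedMilliseconds;
    }

    // Two awaited delays in sequence add up (roughly 2 * ms in total).
    public static async Task<long> TwoInARowAsync(int ms)
    {
        var sw = Stopwatch.StartNew();
        await Task.Delay(ms);
        await Task.Delay(ms);
        return sw.ElapsedMilliseconds;
    }
}
```

Timings depend on the OS timer resolution, so the measured values are approximate: expect about 300 ms, close to 0 ms, and about 600 ms respectively.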

We also register IHomeService with ASP.NET Core dependency injection, so in Startup.cs, in ConfigureServices(), we have this (let's say we need a scoped lifetime):

services.AddScoped<IHomeService, HomeService>();
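To illustrate what "scoped" means, here is a minimal console sketch that uses Microsoft.Extensions.DependencyInjection directly (the container ASP.NET Core uses by default; it needs that NuGet package). FakeHomeService and ScopedLifetimeDemo are hypothetical names for this sketch only. ASP.NET Core creates one scope per HTTP request, so within a request every consumer gets the same instance, while another request gets a new one.

```csharp
using System.Threading.Tasks;
using Microsoft.Extensions.DependencyInjection;

public interface IHomeService
{
    Task<int> IncrAsync(int i);
    Task<int> DecrAsync(int i);
}

// Hypothetical stand-in without any delay, used only to observe lifetimes.
public class FakeHomeService : IHomeService
{
    public Task<int> IncrAsync(int i) => Task.FromResult(i + 1);
    public Task<int> DecrAsync(int i) => Task.FromResult(i - 1);
}

public static class ScopedLifetimeDemo
{
    // Resolves the service twice in one scope and once in a second scope.
    public static (bool SameWithinScope, bool SameAcrossScopes) Check()
    {
        var services = new ServiceCollection();
        services.AddScoped<IHomeService, FakeHomeService>();

        IHomeService a1, a2, b1;
        using (ServiceProvider provider = services.BuildServiceProvider())
        {
            using (IServiceScope scopeA = provider.CreateScope())
            {
                a1 = scopeA.ServiceProvider.GetRequiredService<IHomeService>();
                a2 = scopeA.ServiceProvider.GetRequiredService<IHomeService>();
            }
            using (IServiceScope scopeB = provider.CreateScope())
            {
                b1 = scopeB.ServiceProvider.GetRequiredService<IHomeService>();
            }
        }

        // Same instance inside a scope, a different one in another scope.
        return (ReferenceEquals(a1, a2), ReferenceEquals(a1, b1));
    }
}
```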

Then, let's use it in the default About action of HomeController:

public async Task<IActionResult> About([FromServices] IHomeService homeService)
{
    // 4 -> 5 with IncrAsync(), then 5 -> 4 with DecrAsync()
    int n = await homeService.IncrAsync(4);
    ViewData["Message"] = "Your application description page."
        + await homeService.DecrAsync(n);
    return View();
}

As you can see, we call the service once to increment the default value 4, then call it again to decrement it back to the original value.

Now, let's switch to the unit testing project and, after adding a reference to the MVC project, write some tests for the service itself. In HomeServiceTests.cs we may have:

using System.Threading.Tasks;
using Moq;
using Moq.Protected;
using Xunit;

public class HomeServiceTests
{
    [Fact]
    public async Task IHomeService_Incr_Success_With_Delay()
    {
        IHomeService homeService = new HomeService();
        int n = await homeService.IncrAsync(2);   // real call, waits ~3 seconds
        Assert.Equal(3, n);
    }

    [Fact]
    public async Task IHomeService_Incr_Success_No_Delay()
    {
        var mock = new Mock<HomeService>();
        // Replace the protected OpAsync() so the call returns immediately
        mock.Protected().Setup<Task<int>>("OpAsync", ItExpr.IsAny<int>(),
            ItExpr.IsAny<int>()).Returns(Task.FromResult<int>(3));
        int n = await mock.Object.IncrAsync(2);
        Assert.Equal(3, n);
    }
}

The first test, IHomeService_Incr_Success_With_Delay(), just calls the service method, which waits for those 3 seconds.

In the second test we want to skip that waiting by mocking the OpAsync() method. Remember that OpAsync() is protected, so we can't just call it. For this we use Moq.Protected, but some changes to the code are still necessary. Most importantly, we have to declare OpAsync() as virtual, so its signature in HomeService.cs becomes:

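protected virtual async Task<int> OpAsync(int i, int step)

Also notice how we mock that async method (which returns Task<int>), and that its parameters are declared with ItExpr.IsAny<>() instead of just It.IsAny<>(): protected setups are written with the method name as a string rather than as a lambda expression, and Moq expects ItExpr matchers for their arguments.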
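As a variation on the setup above, here is a sketch (assuming Moq 4.x and xUnit) in which the Returns() callback receives the actual arguments, so the fake keeps the i + step arithmetic while skipping the delay, and Protected().Verify() checks how many times OpAsync() was called. The test class and method names are hypothetical; IHomeService and a simplified HomeService (with the virtual OpAsync()) are repeated so the block compiles on its own.

```csharp
using System.Threading.Tasks;
using Moq;
using Moq.Protected;
using Xunit;

public interface IHomeService
{
    Task<int> IncrAsync(int i);
    Task<int> DecrAsync(int i);
}

public class HomeService : IHomeService
{
    public Task<int> IncrAsync(int i) => OpAsync(i, 1);
    public Task<int> DecrAsync(int i) => OpAsync(i, -1);

    // Must be virtual so Moq's proxy can override it.
    protected virtual async Task<int> OpAsync(int i, int step)
    {
        await Task.Delay(3000);
        return i + step;
    }
}

public class HomeServiceProtectedMockTests
{
    [Fact]
    public async Task Incr_Then_Decr_Uses_Mocked_OpAsync()
    {
        var mock = new Mock<HomeService>();

        // The callback gets the real (i, step) arguments of each call,
        // so the fake computes i + step but returns without waiting.
        mock.Protected()
            .Setup<Task<int>>("OpAsync", ItExpr.IsAny<int>(), ItExpr.IsAny<int>())
            .Returns((int i, int step) => Task.FromResult(i + step));

        // IncrAsync/DecrAsync are not virtual, so their real code runs
        // and calls the mocked OpAsync().
        int n = await mock.Object.IncrAsync(4);
        int m = await mock.Object.DecrAsync(n);

        Assert.Equal(5, n);
        Assert.Equal(4, m);

        // One OpAsync() call per public method call.
        mock.Protected().Verify<Task<int>>("OpAsync", Times.Exactly(2),
            ItExpr.IsAny<int>(), ItExpr.IsAny<int>());
    }
}
```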

If we want to test the controller itself (HomeController), we can have something like this in HomeControllerTests.cs:

using System.Threading.Tasks;
using Microsoft.AspNetCore.Mvc;
using Moq;
using Moq.Protected;
using Xunit;

public class HomeControllerTests
{
    [Fact]
    public async Task HomeController_About_Success_With_Delay()
    {
        var homeController = new HomeController();
        IHomeService homeService = new HomeService();
        // Real service: 4 -> 5 -> 4, waits ~3 + 3 seconds
        var x = await homeController.About(homeService) as ViewResult;
        Assert.NotNull(x);
        Assert.Equal("Your application description page.4",
            x.ViewData["Message"].ToString());
    }

    [Fact]
    public async Task HomeController_About_Success_No_Delay()
    {
        var mock = new Mock<HomeService>();
        // Mocked OpAsync() always returns 4, immediately
        mock.Protected().Setup<Task<int>>("OpAsync", ItExpr.IsAny<int>(),
            ItExpr.IsAny<int>()).Returns(Task.FromResult<int>(4));
        var homeController = new HomeController();
        var x = await homeController.About(mock.Object) as ViewResult;
        Assert.NotNull(x);
        Assert.Equal("Your application description page.4",
            x.ViewData["Message"].ToString());
    }
}

Again, the first test just calls the action and waits 3 + 3 seconds, while the second test mocks OpAsync() the same way as in HomeServiceTests. For the asserts we can use the ViewData[] value directly.

The alternative would be to manually create a class inheriting from HomeService, with a public method that just calls the base protected OpAsync(), but using Moq's built-in functionality may be quicker to develop.
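A minimal sketch of that hand-written alternative, with no mocking library. ExposedHomeService and FastHomeService are hypothetical names: the first only forwards a public method to the protected OpAsync() (so it still waits 3 seconds), while the second overrides OpAsync() to skip the delay, which, just like the Moq setup, requires OpAsync() to be virtual. HomeService is repeated in a simplified form so the block compiles on its own.

```csharp
using System.Threading.Tasks;

public interface IHomeService
{
    Task<int> IncrAsync(int i);
    Task<int> DecrAsync(int i);
}

public class HomeService : IHomeService
{
    public Task<int> IncrAsync(int i) => OpAsync(i, 1);
    public Task<int> DecrAsync(int i) => OpAsync(i, -1);

    protected virtual async Task<int> OpAsync(int i, int step)
    {
        await Task.Delay(3000);
        return i + step;
    }
}

// Option 1: expose the real protected method so a test can call it directly.
// This works even without 'virtual', but the 3-second delay remains.
public class ExposedHomeService : HomeService
{
    public Task<int> OpAsyncPublic(int i, int step) => base.OpAsync(i, step);
}

// Option 2: replace the protected method (requires 'virtual'),
// which is what the Moq setup does behind the scenes.
public class FastHomeService : HomeService
{
    public int OpCalls { get; private set; }

    protected override Task<int> OpAsync(int i, int step)
    {
        OpCalls++;                          // count calls, like a mock would
        return Task.FromResult(i + step);   // same result, no delay
    }
}
```

The trade-off is a little more code per tested class, in exchange for no dependency on a mocking library and compile-time checking of the method name instead of the "OpAsync" string.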

It's true this code doesn't strictly follow SOLID principles, especially the D part ("One should depend upon abstractions, not concretions"), because here we mostly tested the concrete HomeService class instead of the IHomeService interface. But this is an example about testing protected methods, which only make sense in classes, not in interfaces (whose members are supposed to be public).
